Built Environment Informatics Lab

The Moss and Associates Built Environment Informatics Lab (BEIL), located in EC 2740, is equipped with cutting-edge visualization and surveying technologies to support teaching and research. The BEIL hosts the following resources:

Resources available

-

The WorldViz CornerCave is a 3D visualization system that combines advanced head-motion and hand-interaction tracking, stereo glasses, tracking cameras and short-range projectors. It instantly turns the room into an immersive 3D theatre.

The system is controlled with a navigation wand, and the tracking cameras pick up the beacons on the 3D glasses and the wand to follow the user's position in the system.

Multiple students can collaborate in the system at once. Note, however, that the cameras track only the person wearing the beacons. The other users cannot control the view, but they can still participate and view the content in 3D.

The WorldViz CornerCave system at BEIL can accommodate up to 10 users at once.

-

The BEIL is also equipped with an Oculus Rift DK2 headset and its motion-tracking camera. The headset system is portable and will be used predominantly in this project. The lab's steering-wheel simulator makes it possible to simulate a construction equipment operator scenario, in which users operate a piece of construction equipment in the virtual environment. Data on the users' behavior and inputs can be recorded for scientific analysis.

Aside from the Oculus Rift, the lab also has cardboard-based headsets. These headsets are inexpensive enough that each student can have their own device for demos. Because they are simple cardboard viewers, they offer a less interactive platform for virtual environments than the Oculus headset, so they are best used for demonstrations that require little interaction with the virtual scenario.

-

The Faro Focus 3D X130 laser scanner available at BEIL is used to scan the geometry of the site, and the virtual reality environments and test scenarios are built from the laser scan data. The scanner is also available to students for their projects, which gives them an opportunity to learn about the latest developments in surveying technology and hands-on experience working with large point cloud data sets.
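Large scans are often thinned before they are used to build a VR scene. One common technique is voxel-grid downsampling: space is divided into equal cubes and all points inside a cube are replaced by their centroid. The plain-Python sketch below assumes the points have already been exported as (x, y, z) tuples in metres; `voxel_downsample` is a hypothetical helper for illustration, not part of the Faro software or the lab's actual pipeline.

```python
import math
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: Iterable[Point], voxel_size: float) -> List[Point]:
    """Replace all points that fall in the same cubic voxel with their centroid.

    Hypothetical helper: points are (x, y, z) tuples, voxel_size is in the same units.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Per voxel: running sums of x, y, z and the number of points seen.
    cells: Dict[Tuple[int, int, int], List[float]] = {}
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        acc = cells.setdefault(key, [0.0, 0.0, 0.0, 0.0])
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    # One output point per occupied voxel, in first-seen order.
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in cells.values()]
```

For example, `voxel_downsample(points, 0.05)` keeps at most one point per 5 cm cube. A dedicated point cloud library would normally be used for scans with millions of points; the pure-Python version only shows the idea.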

-

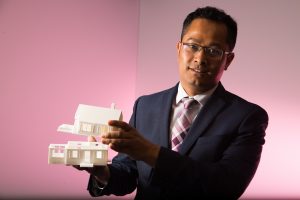

A MakerBot Replicator 3D printer is used for student learning activities. 3D printers are especially helpful for DIY hardware projects, where students print enclosures for their hardware to protect the electrical wiring.

3D-printed models also help clarify concepts that students would otherwise struggle to grasp.

-

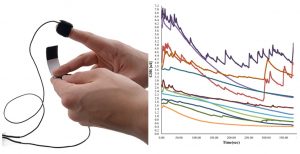

Physiological data from workers reveal valuable information about the human aspect of construction safety.

Data such as posture and other vital signs help us understand the health status of workers and explore the underlying causes of accidents.

Affective sensing technologies such as galvanic skin response (GSR) reveal how an individual's emotional state affects impromptu decision making and hazard avoidance. The image below shows the emotional reactions of different individuals exposed to the same virtual construction scene containing multiple hazards.

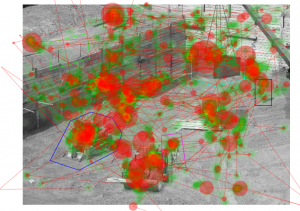
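Emotional reactions in a GSR recording typically show up as skin conductance responses (SCRs): short rises from a trough to a peak. The sketch below is a deliberately simplified trough-to-peak detector, assuming a uniformly sampled signal in microsiemens at sampling rate `fs`; the function name and the 0.05 µS default threshold are illustrative assumptions, since published SCR criteria vary and this is not the lab's analysis method.

```python
from typing import List, Sequence, Tuple


def detect_scrs(signal: Sequence[float], fs: float,
                min_amplitude: float = 0.05) -> List[Tuple[float, float]]:
    """Return (peak_time_s, amplitude) for each skin conductance response.

    Simplified detector: track the lowest value since the last accepted response
    (the trough) and, at every local maximum, accept a response if the rise from
    that trough is at least min_amplitude (same units as the signal, e.g. microsiemens).
    """
    if len(signal) < 3:
        return []
    responses: List[Tuple[float, float]] = []
    trough = signal[0]
    for i in range(1, len(signal) - 1):
        trough = min(trough, signal[i])
        is_local_max = signal[i - 1] < signal[i] >= signal[i + 1]
        if is_local_max and signal[i] - trough >= min_amplitude:
            responses.append((i / fs, signal[i] - trough))
            # Measure the next response from a fresh trough after this peak.
            trough = signal[i]
    return responses
```

Aligning the returned peak times with the moments each hazard appears in the virtual scene is one way to compare how strongly different individuals react to the same hazard. Real recordings usually need smoothing first, because sensor noise creates many small local maxima.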

Eye-tracking technology records gaze patterns, helping us understand how each individual looks at the same scenario (environment) differently and how these patterns can affect their situation awareness. The image below shows how a subject's gaze moved across a photograph of a construction site when the subject was asked to recognize the hazards in it. The radius of each circle reflects the time spent looking at that area.
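A gaze plot of this kind can be derived from a list of fixations (a gaze position plus how long the eye stayed there). The sketch below assumes fixations in image pixel coordinates and hypothetical rectangular "areas of interest" drawn around each hazard; it totals the dwell time per hazard and scales each circle's radius linearly with fixation duration. The class and function names are illustrative, not taken from any eye-tracker's software.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Fixation:
    x: float            # gaze position in image pixels
    y: float
    duration_ms: float  # how long the gaze stayed at this point


@dataclass
class AreaOfInterest:
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, f: Fixation) -> bool:
        return self.left <= f.x <= self.right and self.top <= f.y <= self.bottom


def dwell_time_per_aoi(fixations: List[Fixation],
                       aois: List[AreaOfInterest]) -> Dict[str, float]:
    """Total fixation time (ms) that falls inside each area of interest."""
    totals = {aoi.name: 0.0 for aoi in aois}
    for f in fixations:
        for aoi in aois:
            if aoi.contains(f):
                totals[aoi.name] += f.duration_ms
    return totals


def circle_radii(fixations: List[Fixation], max_radius_px: float = 40.0) -> List[float]:
    """Radius for each fixation circle, scaled linearly so the longest gets max_radius_px."""
    longest = max((f.duration_ms for f in fixations), default=0.0)
    if longest == 0:
        return [0.0 for _ in fixations]
    return [max_radius_px * f.duration_ms / longest for f in fixations]
```

A hazard whose area of interest accumulates little or no dwell time is a candidate for one the subject overlooked.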

-

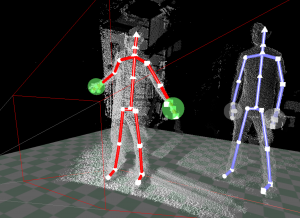

Depth cameras are used to track human movement for activity analysis as well as to create animations in virtual reality.
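For activity and posture analysis, a depth camera's skeleton tracking provides 3D joint positions, and a basic derived measure is the angle at a joint. The sketch below assumes three joint positions in metres in the camera's coordinate frame (for example shoulder, elbow and wrist); `joint_angle_deg` is a hypothetical helper, not a depth camera SDK function.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def joint_angle_deg(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Angle at joint b (degrees) between the segments b->a and b->c."""
    u = tuple(p - q for p, q in zip(a, b))
    v = tuple(p - q for p, q in zip(c, b))
    dot = sum(p * q for p, q in zip(u, v))
    norm = math.sqrt(sum(p * p for p in u)) * math.sqrt(sum(q * q for q in v))
    if norm == 0:
        raise ValueError("joint positions must be distinct")
    # Clamp to [-1, 1] to guard against rounding error before acos.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))
```

Computing such angles frame by frame gives a time series (e.g. elbow or trunk angle) that can be segmented into activities or checked against awkward-posture thresholds.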

A Structure Sensor is used to scan human bodies, from which realistic 3D avatars are created.

360 cameras are used to capture situations of interest in a VR-ready format. Pictures and videos captured with 360 cameras are a great tool for education and research because the spherical recording is not limited by the camera's field of view. The video below shows a Bobcat operator's field of view (shot in collaboration with Emmanuel Okwor, Grelite Corp http://www.grelite.net) and is used to demonstrate the equipment's blind spots. The video can be viewed on any mobile device with a gyroscope or with a cardboard VR headset.
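Because a 360 frame stores the whole sphere, a viewer only has to decide which part to show: the phone's gyroscope supplies a viewing direction, which maps to a location in the frame. The sketch below assumes the common equirectangular layout and a hypothetical convention in which yaw 0 is the horizontal centre of the image (increasing to the right) and pitch +90 is straight up; actual players may use different conventions.

```python
from typing import Tuple


def view_center_pixel(yaw_deg: float, pitch_deg: float,
                      width: int, height: int) -> Tuple[int, int]:
    """Pixel at the centre of the view for a direction in an equirectangular frame.

    Assumed convention: yaw 0 = image centre, increasing to the right;
    pitch +90 = straight up, -90 = straight down.
    """
    if not -90.0 <= pitch_deg <= 90.0:
        raise ValueError("pitch must be within [-90, 90] degrees")
    # Longitude wraps around, so any yaw maps into [0, width).
    u = ((yaw_deg + 180.0) % 360.0) / 360.0 * width
    v = (90.0 - pitch_deg) / 180.0 * height
    # Clamp to valid indices (pitch = -90 would otherwise land one row past the bottom).
    return min(int(u), width - 1), min(int(v), height - 1)
```

Turning the phone therefore only changes which region of the same recorded frame is displayed, which is why a single recording can reveal what lies in an operator's blind spots.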

-

A Lynxmotion robotic arm is available for students to work on the automated assembly of 3D-printed miniature building elements. The arm can be programmed to work manually, automatically or in a combination of both. IR sensors and depth cameras can be used to sense the environment for automatic assembly. In the mock site setup, a truck hauls the parts to the site and the arm assembles them directly off the truck.

The robotic arm can be programmed to work within multiple constraints to assemble a structure based on a Building Information Model (BIM).
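One constraint any BIM-driven assembly must respect is that an element can only be placed after everything it rests on. The sketch below derives a valid placement order with a topological sort (Kahn's algorithm), choosing the lowest ready element first. It assumes support relationships and elevations have already been extracted from the model into plain dictionaries; `assembly_sequence`, the element names and that data format are hypothetical, and the lab's actual planning constraints are not described here.

```python
import heapq
from typing import Dict, List, Tuple


def assembly_sequence(supports: Dict[str, List[str]],
                      elevation: Dict[str, float]) -> List[str]:
    """Order elements so each is placed after every element it rests on.

    supports[e] lists the elements e rests on; every element must appear as a key.
    Among elements that are ready, the lowest is placed first (ties broken by name).
    Raises ValueError if the support relationships contain a cycle.
    """
    remaining = {e: len(deps) for e, deps in supports.items()}
    dependents: Dict[str, List[str]] = {e: [] for e in supports}
    for e, deps in supports.items():
        for d in deps:
            dependents[d].append(e)
    # Min-heap of (elevation, name) for elements whose supports are all placed.
    ready: List[Tuple[float, str]] = [(elevation[e], e) for e, n in remaining.items() if n == 0]
    heapq.heapify(ready)
    order: List[str] = []
    while ready:
        _, e = heapq.heappop(ready)
        order.append(e)
        for child in dependents[e]:
            remaining[child] -= 1
            if remaining[child] == 0:
                heapq.heappush(ready, (elevation[child], child))
    if len(order) != len(supports):
        raise ValueError("circular support relationships; no valid sequence")
    return order
```

Each element in the returned order could then be mapped to a pick position on the truck and a place position on the mock site; reachability or gripper constraints would further filter which ready element the arm takes next.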

DIY kits are also available for developing tracking devices for experiments and prototypes for home automation. The kits are also used to teach DIY projects to students.